Generative Adversarial Networks Part 1 - Understanding GANs

⌛ This article is quite old! It dates back to when I was studying for my Master of Science in Machine Learning at Georgia Tech. Since then, I have moved my career away from the subject. While the topic is interesting, I am clearly not an authoritative source on it, and you should keep that in mind when reading this article…

I don’t talk much about machine learning on this blog, having focused mostly on web-related software engineering lately, but I do study this field at Georgia Tech. Recently, a professor of mine (Dr. Andrew Gardner) had us focus on GANs, a kind of model that I’d like to present to you today. I assume you have a basic understanding of neural networks and know a bit about TensorFlow and Keras. If you’ve only played with something like MNIST, I think this tutorial will still be accessible enough for you. Now that you’ve been warned, let’s get to it!

Generative Adversarial Networks, or GANs, are a recent unsupervised technique (introduced in 2014 by Ian Goodfellow et al.) for training neural networks to generate plausible data using a zero-sum game. Fortunately, this is not as complicated as it sounds.

In this blog post, I’m going to explain how GANs work in general and what they are commonly used for. In the next post, I’m going to show you how we can use Keras to train such a model.

Why GANs?

Generating data is useful for various tasks. The first that comes to mind is augmenting a dataset with plausible additional data: a popular use of GANs is to feed them the ImageNet dataset and have them generate plausible pictures that do not exist in the original dataset. From a human standpoint, those images may look a bit unrealistic, but they may still make sense.

[Images: a sample of original data next to some generated images]

An example of generated images presented by the OpenAI team.

By looking at the generated output, we can see that the GAN managed to represent a certain understanding of our world and is able, from this understanding, to create new images that could fit in our own vision of the world.

GANs can also be used for many other interesting tasks that may seem unrelated. For instance, GANs are able to upscale an image in a plausible way, inferring details that do not exist in the original. GANs are also able to infer the next frames of a video in a realistic way. But my favorite application may be the one where simplistic drawings are transformed into photorealistic scenery. Finally, GANs seem to be researched extensively by OpenAI with the goal of generating seemingly real situations for reinforcement learning experiments.

Model Overview

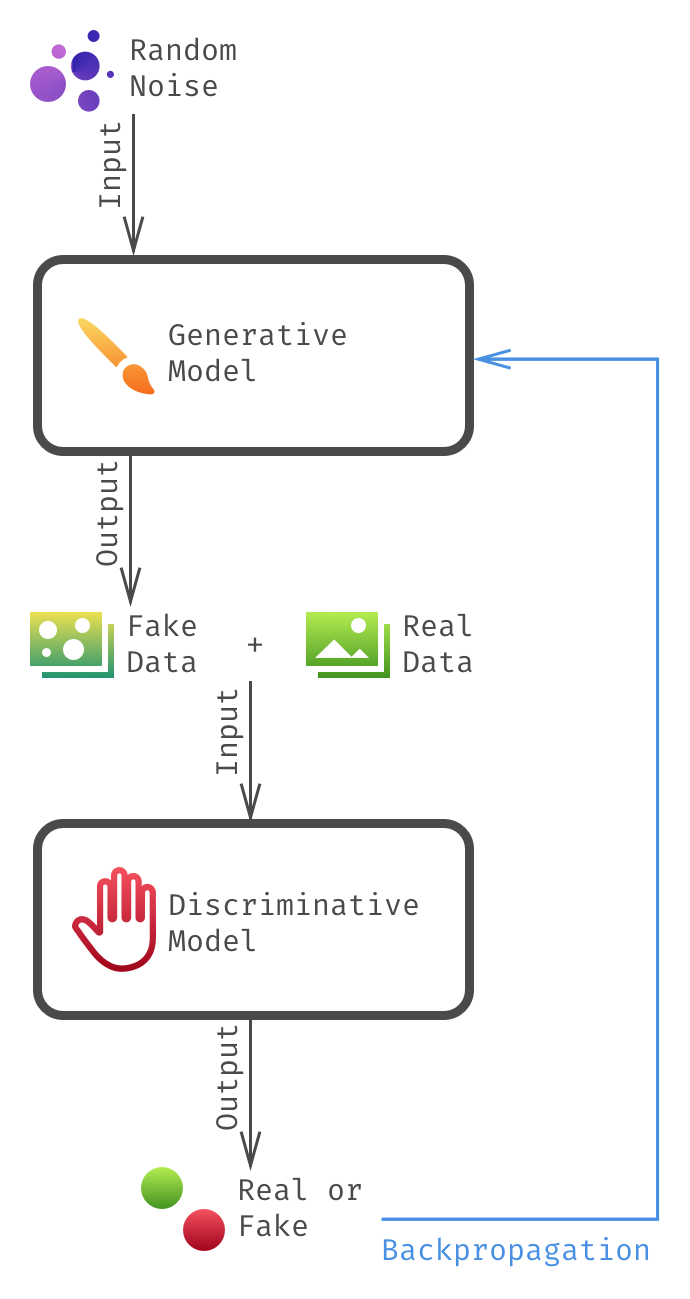

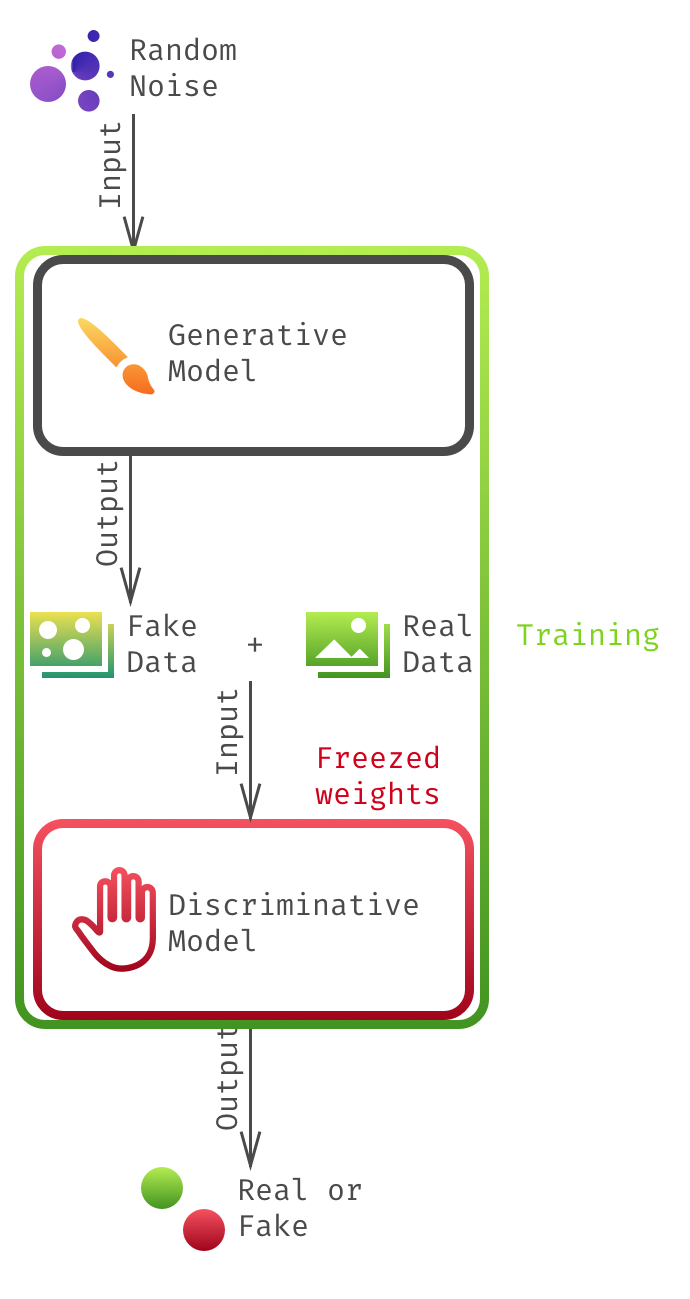

The overall idea behind GANs is that you have two models playing against each other. As Ian Goodfellow describes it, one is a counterfeiter trying to produce seemingly real data, while the other is a cop trying to spot the counterfeit data without raising false positives on real data.

- The Generative Model (aka the counterfeiter) takes random input and tries to generate realistic data (curves, images, sounds, text, …). It has no idea what the real data looks like; it can only adjust based on the feedback from the other model.

- The Discriminative Model (aka the cop) takes as input a mix of data generated by the other model and the actual real data we want that model to learn to fake. Its output provides the error signal that is backpropagated to train the other model.

An overview of GANs: the output of the discriminator model is used to train the generator through backpropagation.
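For reference, the counterfeiter-versus-cop game is usually written as a minimax objective. The formula below is the value function from the original 2014 paper; the notation comes from that paper rather than from this post: $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for a noise input $z$, $p_{\text{data}}$ is the distribution of the real data and $p_z$ is the noise distribution.

```latex
\min_G \max_D V(D, G) =
    \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to make $V$ large (confident "real" on real data, confident "fake" on generated data), while the generator tries to make it small. One side's gain is the other's loss, which is what makes this a zero-sum game.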

How to train a GAN?

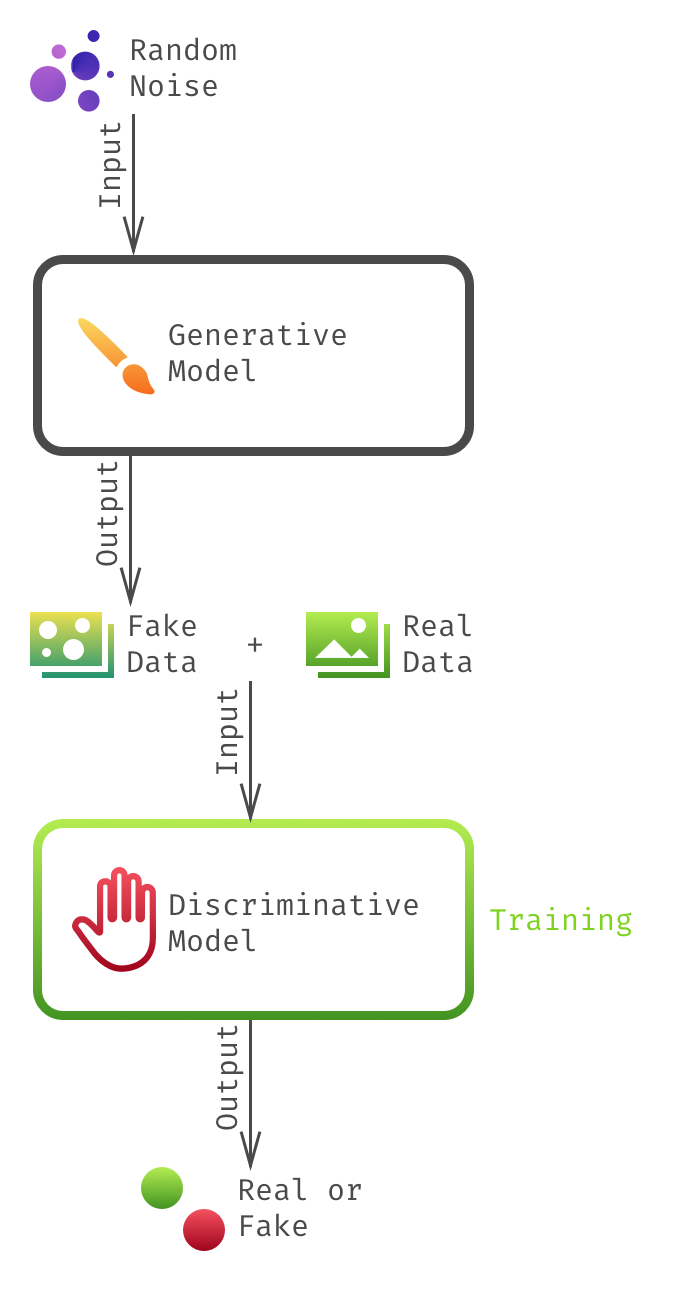

How do we train this efficiently though? We’ll see in the next part how to actually train the model with Keras, but the training loop itself is simple: for each epoch, you only need two training steps.

[Images: 1. Train the discriminator; 2. Train the chained GAN]

- Train the discriminator: sample some images from your dataset and some noise that you pipe through the generator model. Then use this data to train the discriminator to tell generated data from real data.

- Train the generator via the chained models: generate sample data and push the chained generator and discriminator toward telling you that it is real data. However, we don’t want to alter the weights of the discriminator during this step. That’s why we need to freeze the discriminator’s weights.
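To make the two steps concrete, here is a minimal sketch in plain Python rather than Keras. Everything in it is an assumption made for illustration: the "real" data is a one-dimensional Gaussian centered on 4, the generator is a linear function of the noise, the discriminator is a logistic regression, and the names (`Generator`, `Discriminator`, `train_discriminator`, `train_generator`, `train`) are hypothetical. The generator step uses the common "make the discriminator say real" loss, -log D(G(z)).

```python
import math
import random


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


class Generator:
    """Toy generator: maps a noise value z to the sample a * z + b."""

    def __init__(self):
        self.a, self.b = 1.0, 0.0

    def sample(self, z):
        return self.a * z + self.b


class Discriminator:
    """Toy discriminator: logistic regression, D(x) = P(x is real)."""

    def __init__(self):
        self.w, self.c = 0.1, 0.0

    def prob_real(self, x):
        return sigmoid(self.w * x + self.c)


def train_discriminator(disc, gen, real_batch, noise_batch, lr):
    """Step 1: update only the discriminator (real -> 1, generated -> 0)."""
    samples = [(x, 1.0) for x in real_batch]
    samples += [(gen.sample(z), 0.0) for z in noise_batch]
    grad_w = grad_c = 0.0
    for x, label in samples:
        err = disc.prob_real(x) - label  # d(cross-entropy)/d(logit)
        grad_w += err * x
        grad_c += err
    disc.w -= lr * grad_w / len(samples)
    disc.c -= lr * grad_c / len(samples)


def train_generator(disc, gen, noise_batch, lr):
    """Step 2: update only the generator so that D(G(z)) moves toward 1.

    The discriminator is "frozen": its weights are read, never written.
    """
    grad_a = grad_b = 0.0
    for z in noise_batch:
        x = gen.sample(z)
        # Loss is -log D(x); its gradient with respect to x is -(1 - D(x)) * w.
        dloss_dx = -(1.0 - disc.prob_real(x)) * disc.w
        grad_a += dloss_dx * z  # dx/da = z
        grad_b += dloss_dx      # dx/db = 1
    gen.a -= lr * grad_a / len(noise_batch)
    gen.b -= lr * grad_b / len(noise_batch)


def train(epochs=200, batch_size=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    gen, disc = Generator(), Discriminator()
    for _ in range(epochs):
        # Hypothetical "real" dataset: samples from a Gaussian centered on 4.
        real = [rng.gauss(4.0, 1.0) for _ in range(batch_size)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        train_discriminator(disc, gen, real, noise, lr)
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        train_generator(disc, gen, noise, lr)
    return gen, disc
```

Calling `train()` returns the toy generator and discriminator after the alternating updates. Note that `train_generator` only reads `disc.w` and `disc.c`: that is the plain-Python counterpart of freezing the discriminator while the chained model is trained.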

What are their limits? How do they compare with similar models?

Let’s start with the main issue with GANs: it is particularly hard to quantify how good their output is. They are trained without labels, so in theory the loss would be the natural metric to watch. However, since a GAN is in fact two models fighting each other, each one tries to lower its own loss while increasing the other’s, so neither loss tells you directly how good the generated data is. GANs are also exposed to the risk of generating very similar results for different noise inputs, a failure commonly called mode collapse. Keep in mind that there is very good research on mitigating those issues, but it goes well beyond the scope of this introductory tutorial. If you are interested in learning more, I advise you to follow the recent work of the University of Montréal and OpenAI (where the inventors of GANs are primarily working). I especially recommend “Improved Techniques for Training GANs”.
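The "very similar results for different noise input" problem (often called mode collapse) can at least be spotted with a crude check. The sketch below is an illustrative heuristic under strong assumptions, not an established evaluation metric: it only handles one-dimensional samples, and the function name `spread_ratio` and its parameters are hypothetical. It compares how spread out the generated samples are with how spread out the real data is.

```python
import random
import statistics


def spread_ratio(generate, real_samples, n=1000, seed=0):
    """Standard deviation of generated samples divided by that of the real data.

    `generate` is any function mapping one noise value to one sample.
    A ratio close to 0 means different noise inputs give nearly the same output.
    """
    rng = random.Random(seed)
    fake = [generate(rng.gauss(0.0, 1.0)) for _ in range(n)]
    return statistics.pstdev(fake) / statistics.pstdev(real_samples)
```

For instance, with real data drawn from a Gaussian centered on 4, a generator such as `lambda z: 4.0 + 0.01 * z` gives a ratio close to 0 (it almost ignores its noise input), while `lambda z: 4.0 + z` gives a ratio close to 1. A good ratio does not prove the output is good; it only rules out the most obvious form of collapse.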

GANs are very good for their generative abilities, but they are also used for tasks where competing models are very strong, such as latent modeling (a semi-supervised approach; this time we would not feed noise into the GAN), at which Variational Autoencoders (VAEs) are already very good.

VAEs are good at reducing data to a few interpretable dimensions, while GANs, as said before, are harder to evaluate. However, compared to VAEs, GANs generate less “blurry” output.

There are also a lot of other models that I will not cover here, since they are more specialized than GANs and may work better in specific fields (such as PixelCNN for images). That’s why, depending on the task, it may be interesting to combine GANs with another model (Adversarial VAEs for instance) to get the most out of a specific situation.

Conclusion

I’ll conclude this first part by saying that GANs are a good fundamental building block and, while not perfect, they can be used and combined to create very interesting models. Research moves fast, and I would advise you to keep an eye on it if you are interested. I really think there is still a lot to be done with GANs that we have not imagined yet! I’ll stop here, since I’m also interested in showing you how to create your own GANs in the next part.